Extrapolation of radar echo with neural networks

Ideas on how to predict precipitation from weather radar images using machine learning.

As one of the young guns at Meteopress, I joined the team in March 2019 to write my bachelor’s thesis here. Under the supervision of Jakub Bartel, I have spent the past few months exploring the use of neural networks for the extrapolation of radar echo.

And the results? They are quite promising.

Weather Nowcasting

The way we communicate has changed dramatically in the last two decades. From reading newspapers, listening to the radio and watching TV to find out what is happening outside (and of course, what the weather will be like), we have advanced to using the internet as our main source of information. Moreover, thanks to mobile devices and cellular data connections, the broad public can now access information in real time.

This revolution creates opportunities for new weather forecasts and new applications of them. We capture a vast amount of high-density weather data. When this data is processed precisely and published in real time, it becomes possible to warn people about severe weather and so protect their lives and property. Wind and solar power plants can be made more effective, air traffic can become even safer, and so on.

The meteorological community has been talking about this for a while now, and calls it nowcasting.

Nowcasting is forecasting with local detail, by any method, over a period from the present to 6 hours ahead, including a detailed description of the present weather.

At Meteopress, we are implementing and experimenting with various types of nowcasting methods. On our website, we display precipitation captured by weather radars in real time. In cooperation with an insurance company, we warn people about incoming severe weather (hail, storms, …) via text messages, push notifications, and wallet card notifications so they can protect their property. The junior development team Ostrava is finishing work on the COTREC method for radar echo extrapolation. We see endless possibilities here, and we have bold plans for the future.

Radar Echo Extrapolation

Extrapolation, in general, is a process of estimating new data beyond the original range of observation.

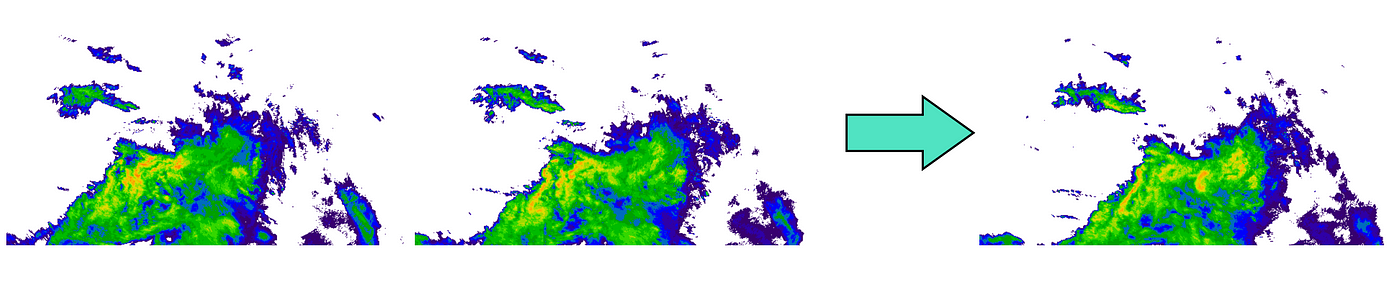

In our case, we are working with sequences of weather radar images. Radar echo is the signal reflected back to the radar by an object (precipitation), and its intensity is displayed in the images as color. The goal is to predict future images that continue the observed sequence.

The Idea to Use a Neural Network

Extrapolation by the COTREC method captures the motion of a radar echo well. The drawback is that the extrapolated image is created by shifting whole tiles of the input image according to the obtained motion vectors. Naturally, such a method cannot capture growth or decay of precipitation intensity, or subtle changes in the structure of the radar echo.
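To make the limitation concrete, here is a deliberately simplified sketch of tile shifting. It is not the COTREC implementation: the function name `shift_tiles`, the tile size, and the integer motion vectors are all assumptions made for illustration, and the motion vectors are taken as given rather than estimated.

```python
import numpy as np

def shift_tiles(image, vectors, tile=32):
    """Move every tile of `image` by its own (dy, dx) motion vector.

    image:   2D array of echo intensities
    vectors: integer array of shape (rows, cols, 2), one (dy, dx) per tile
    """
    h, w = image.shape
    out = np.zeros_like(image)
    for r in range(vectors.shape[0]):
        for c in range(vectors.shape[1]):
            dy, dx = int(vectors[r, c, 0]), int(vectors[r, c, 1])
            y0, x0 = r * tile, c * tile
            block = image[y0:y0 + tile, x0:x0 + tile]
            # Destination of the tile, clipped to the image bounds.
            ty, tx = y0 + dy, x0 + dx
            ys, xs = max(ty, 0), max(tx, 0)
            ye = min(ty + block.shape[0], h)
            xe = min(tx + block.shape[1], w)
            if ys >= ye or xs >= xe:
                continue  # the tile moved completely out of the image
            out[ys:ye, xs:xe] = block[ys - ty:ye - ty, xs - tx:xe - tx]
    return out
```

Because pixel values are only copied from one place to another, the output can never contain an intensity that was not already present in the input. That is exactly why growth and decay are out of reach for this kind of method.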

Some interesting work has been done in recent years on video frame interpolation and extrapolation using convolutional neural networks. If you are interested in further reading, Long et al. were the first to do so in 2016. A method currently achieving state-of-the-art results for extrapolation was described by Reda et al. in 2018.

Our motivation for trying these machine learning methods is to find one that excels in the conditions where COTREC falls short.

Architecture

After experiments, we decided to go with a common convolutional network for its satisfying combination of simplicity and performance. You can read more about convolutional neural networks in the Stanford CS231n class notes.

We trained the network to predict the next image in a sequence of consecutive weather radar images. It takes three concatenated images as input and predicts the fourth as output.
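As a minimal sketch of how such training pairs can be formed, the snippet below slides a window over a sequence of frames, stacks three frames along a channel axis, and uses the following frame as the target. The name `make_samples` and the channels-last layout are assumptions for illustration, not details of our actual pipeline.

```python
import numpy as np

def make_samples(frames, n_inputs=3):
    """Turn a (T, H, W) sequence into (inputs, targets) pairs.

    inputs:  (N, H, W, n_inputs), consecutive frames stacked as channels
    targets: (N, H, W), the frame that follows each input window
    """
    frames = np.asarray(frames)
    if len(frames) <= n_inputs:
        raise ValueError("need at least n_inputs + 1 frames")
    inputs, targets = [], []
    for i in range(len(frames) - n_inputs):
        window = frames[i:i + n_inputs]             # (n_inputs, H, W)
        inputs.append(np.moveaxis(window, 0, -1))   # (H, W, n_inputs)
        targets.append(frames[i + n_inputs])
    return np.stack(inputs), np.stack(targets)

# Five toy frames of 2x2 pixels give two training pairs.
toy = np.arange(5 * 2 * 2).reshape(5, 2, 2)
x, y = make_samples(toy)
print(x.shape, y.shape)
```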

The network is built from convolutional blocks, each consisting of three consecutive convolutional layers with filters of size (3,3); the remaining layers use (2,2) filters. The upsampling block combines bilinear interpolation with a subsequent convolutional layer. Residual connections are incorporated to propagate fine details from input to output. Finally, PReLU is used as the activation function.

Loss Function

The loss function of a neural network compares the output with the desired ground truth (the data measured by radar), and the result drives the training of the network. In addition to a traditional per-pixel L1 comparison, we have experimented with a perceptual loss function. Such a loss should compare images in a way similar to how humans compare them.

We obtained good results using a combination of L1 and a perceptual loss based on the relu2_2 layer of the famous VGG19 network for image classification.
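The structure of such a combined loss can be sketched in a few lines. Everything beyond "L1 plus a perceptual term" is an assumption here: the `features` argument is a hypothetical stand-in for the relu2_2 activations of VGG19, the distance in feature space is taken to be a mean absolute difference, and the `weight` balancing the two terms is made up.

```python
import numpy as np

def l1_loss(pred, target):
    """Mean absolute difference between two arrays."""
    return float(np.mean(np.abs(pred - target)))

def combined_loss(pred, target, features, weight=1.0):
    """Per-pixel L1 plus a weighted distance in feature space.

    `features` is any callable mapping an image to a feature array.
    In a real setup it would return activations of a pretrained network.
    """
    pixel_term = l1_loss(pred, target)
    perceptual_term = l1_loss(features(pred), features(target))
    return pixel_term + weight * perceptual_term

def toy_features(image):
    """Toy stand-in for a feature extractor: rectified horizontal gradients."""
    return np.maximum(np.diff(image, axis=-1), 0.0)

pred = np.ones((4, 4))
target = np.zeros((4, 4))
print(combined_loss(pred, target, toy_features))
```

The toy extractor only reacts to edges, so two flat images of different brightness differ in the pixel term but not in the feature term. A learned feature extractor plays the same role, only with far richer notions of structure.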

Data

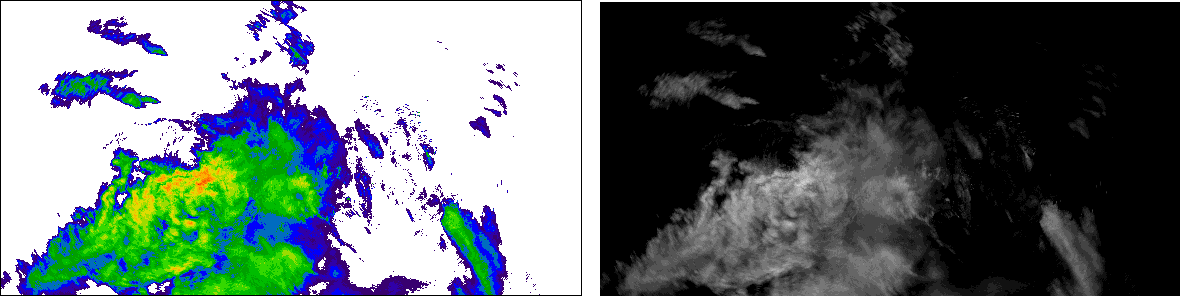

We are still in the concept phase, so for computational reasons (it takes quite a long time to train a neural network) we have worked with smaller images of 96x96 pixels. The original size of the frames was 580x294, and we used ten months of radar data.

Creating the dataset started with converting the images to grayscale, so that precipitation intensity is represented by one integer per pixel instead of three (RGB images are coded with one integer for each of the three color channels).
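One common way to do such a conversion is a weighted sum of the color channels. The sketch below uses the widely used luma weights 0.299, 0.587 and 0.114; whether these exact weights match our conversion is an assumption, and the name `to_grayscale` is only illustrative.

```python
import numpy as np

def to_grayscale(rgb):
    """Convert an (H, W, 3) uint8 RGB image to an (H, W) uint8 image."""
    weights = np.array([0.299, 0.587, 0.114])   # assumed luma weights
    gray = rgb[..., :3].astype(np.float64) @ weights
    return np.rint(gray).astype(np.uint8)

# A 1x3 image with a black, a white and a pure red pixel.
pixels = np.array([[[0, 0, 0], [255, 255, 255], [255, 0, 0]]], dtype=np.uint8)
print(to_grayscale(pixels))
```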

Afterward, we cropped 55 patches of size 96x96 from each of these images. From the resulting sequences, we removed those with little or no precipitation (working with weather radars, you sometimes hope it would rain all the time). We then extracted sequences of four consecutive frames, and ended up with 105647 samples in the dataset.
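Here is a minimal sketch of the cropping and filtering steps. A regular grid with a stride of 48 pixels happens to produce exactly 55 patches from a 580x294 frame, but treat that layout as an assumption, along with the names `crop_patches` and `has_precipitation` and the filtering thresholds.

```python
import numpy as np

def crop_patches(frame, size=96, stride=48):
    """Cut overlapping size x size patches on a regular grid.

    Returns a list of ((y, x), patch) tuples, where (y, x) is the
    top-left corner of the patch in the original frame.
    """
    h, w = frame.shape
    return [((y, x), frame[y:y + size, x:x + size])
            for y in range(0, h - size + 1, stride)
            for x in range(0, w - size + 1, stride)]

def has_precipitation(patch, threshold=0, min_fraction=0.05):
    """Keep a patch only if enough of its pixels exceed the threshold."""
    return bool(np.mean(patch > threshold) >= min_fraction)

frame = np.zeros((294, 580), dtype=np.uint8)   # height x width
frame[100:200, 300:400] = 120                  # one synthetic rain cell
patches = crop_patches(frame)
kept = [p for _, p in patches if has_precipitation(p)]
print(len(patches), len(kept))
```

Keeping the corner coordinates with each patch makes it easy to follow the same location through consecutive frames when the four-frame sequences are assembled.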

Experiments

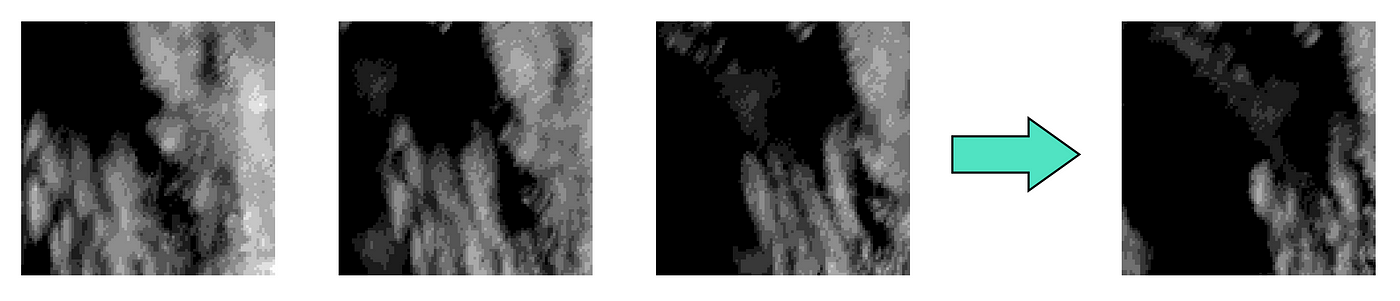

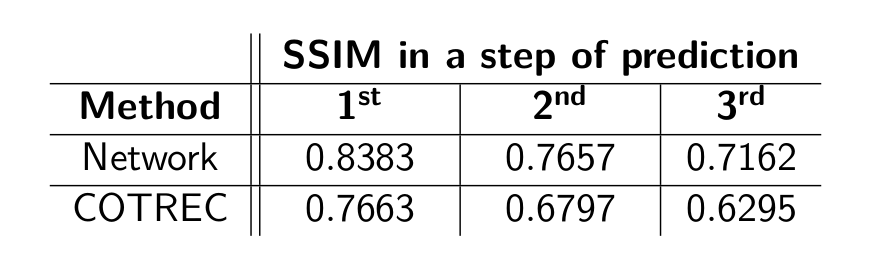

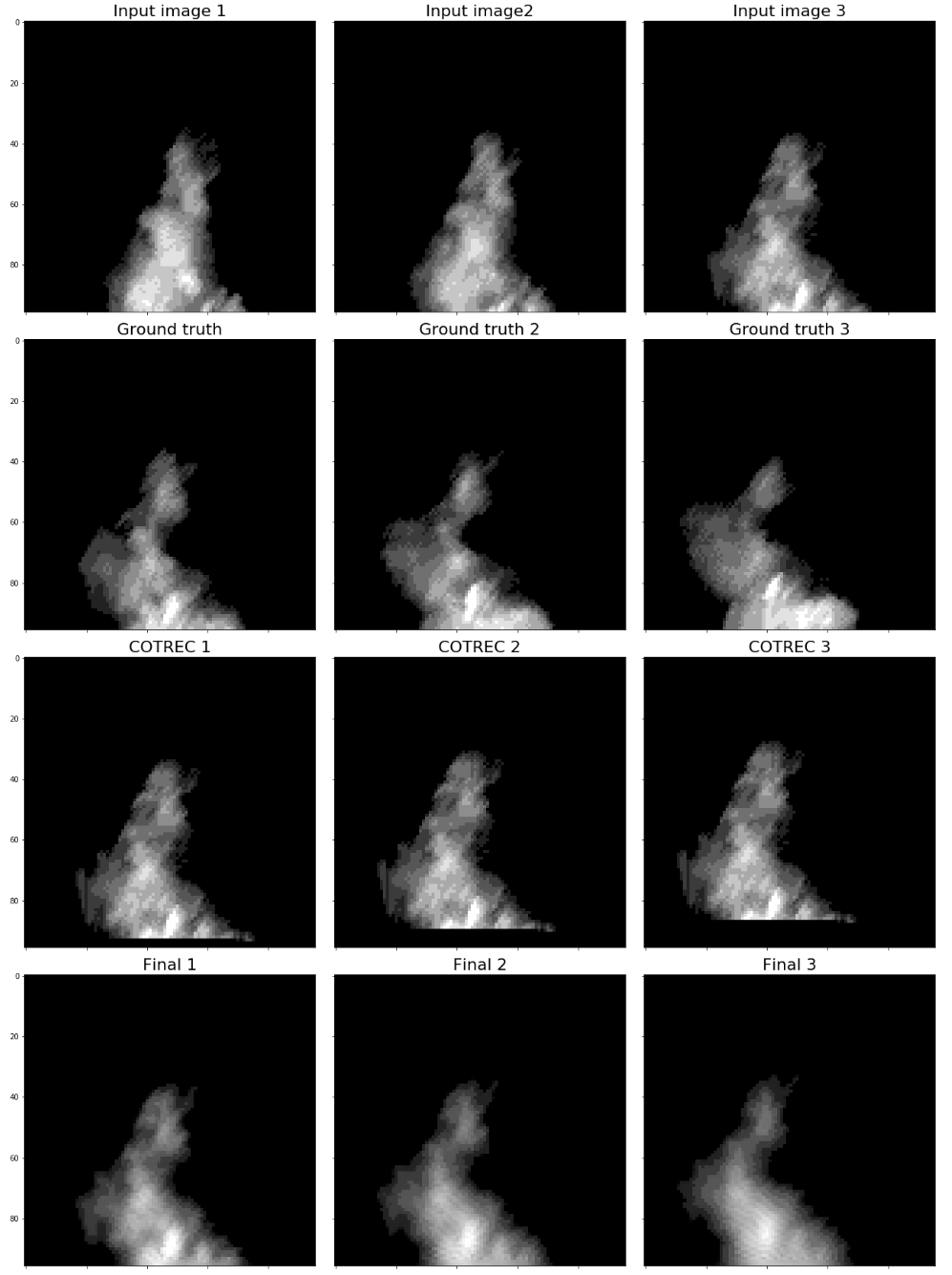

We compared the outputs of the trained network and the COTREC predictions to the ground truth over three steps of prediction. To extrapolate further into the future, the network is fed a sequence that also contains its own previous outputs. As the comparison metric between prediction and ground truth, we used the SSIM (structural similarity) index.
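Feeding the network its own outputs can be sketched as a simple loop. The `model` argument is a hypothetical callable that maps an (H, W, 3) input to an (H, W) prediction; the averaging model in the example is only a placeholder so that the snippet runs without a trained network.

```python
import numpy as np

def rollout(model, frames, steps=3):
    """Predict `steps` future frames, reusing earlier predictions as input."""
    window = list(frames[-3:])              # the three most recent frames
    predictions = []
    for _ in range(steps):
        x = np.stack(window, axis=-1)       # (H, W, 3)
        y = model(x)                        # (H, W)
        predictions.append(y)
        window = window[1:] + [y]           # drop the oldest, append the newest
    return predictions

def average_model(x):
    """Placeholder model: the mean of the three input frames."""
    return x.mean(axis=-1)

observed = [np.full((2, 2), v) for v in (0.0, 1.0, 2.0)]
future = rollout(average_model, observed, steps=3)
print(len(future), future[0].shape)
```

From the second step on, at least one of the three inputs is itself a prediction, and by the fourth step all of them would be.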

A little surprisingly, the network soundly outperformed COTREC in each step of prediction. The example images show that when the structure of the radar echo changes rather than its position, the network does a great job, which is exactly what we were looking for. It has to be noted, though, that the network outputs lack a fair amount of detail.

Further into the future, the SSIM value decreases. This behavior is expected, as any error introduced by either method compounds over time.
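For readers who have not met SSIM before, the following sketch computes a simplified version of it. The standard index is averaged over small local windows; this version evaluates the same formula once over the whole image, which is an assumption made to keep the example short. The constants follow the usual choice of 0.01 and 0.03 times the data range.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0):
    """Simplified SSIM computed over the whole image as a single window."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    numerator = (2 * mx * my + c1) * (2 * cov + c2)
    denominator = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(numerator / denominator)

truth = np.arange(64, dtype=np.float64).reshape(8, 8) * 4.0
print(global_ssim(truth, truth))          # identical images
print(global_ssim(truth, 255.0 - truth))  # inverted image scores lower
```

A value of 1 means the two images are identical, and the score drops as brightness, contrast, or structure diverge.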

Next Steps

Driven by the success of the solution discussed here, we have decided to continue researching machine learning methods for radar echo extrapolation. We are currently working on the proposed network to make it handle larger images and longer-term predictions. Once ready, these nowcasts will be displayed on our website alongside the weather radar measurements.

To improve its performance, we are experimenting with adding various other meteorological data as input and exploring even more loss functions. In terms of different architecture, maybe convolutional LSTMs or GANs? We shall see.

New methods of weather forecasting are here, and they are exciting. Thank you for reading until the end, and stay tuned to see what the future will bring.